With its second quarterly Community Guidelines Enforcement Report, YouTube shows how its efforts to flag and remove offensive content are paying off.

YouTube is expanding its Community Guidelines Enforcement Report to include additional data such as channel removals, the number of comments removed, and the policy reason behind each video or channel removal. The results show that YouTube is getting faster at flagging and removing content, and that it is stepping up action against violative comments.

YouTube has always used a “mix of human reviewers and technology to address violative content” on its platform, and more than a year ago it began using improved machine learning to flag content for review. Together, the two have allowed YouTube’s teams to enforce the platform’s policies much faster. As YouTube explains in a recent announcement, “finding all violative content on YouTube is an immense challenge, but we see this as one of our core responsibilities and are focused on continuously working towards removing this content before it is widely viewed.”

Its efforts are paying off:

- From July to September 2018, its teams removed 7.8 million videos

- 81% of these videos were first detected by machines

- Of the videos first detected by machines, 74.5% had never received a single view

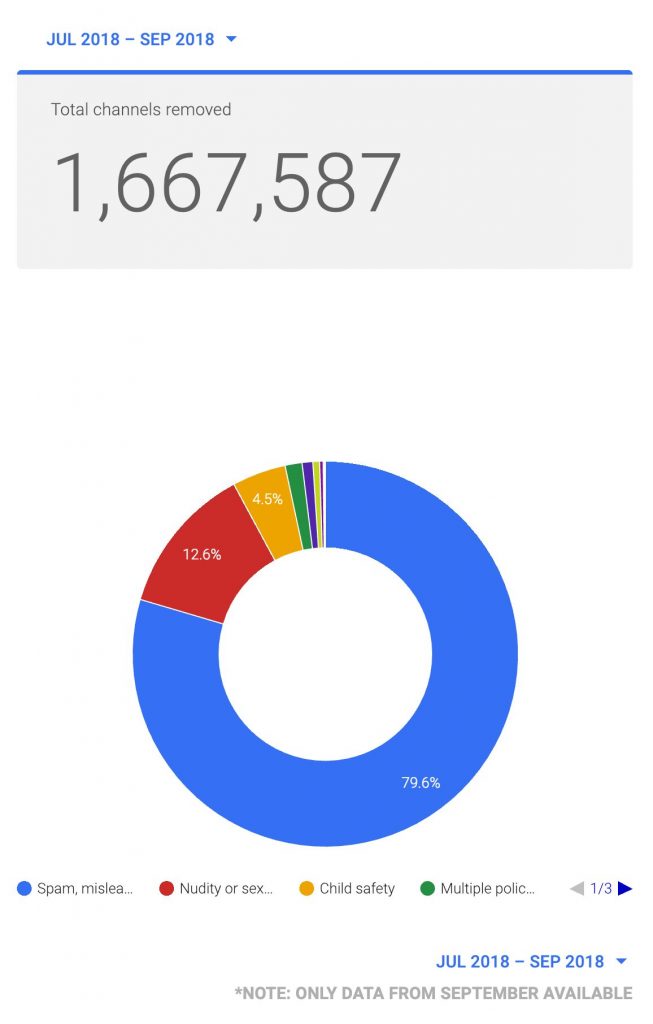

When YouTube detects a video that violates its Community Guidelines, it removes the video and applies a strike to the channel. It will also “terminate entire channels if they are dedicated to posting content” that is prohibited, or if they “contain a single egregious violation, like child sexual exploitation.” YouTube notes that “the vast majority of attempted abuse comes from bad actors trying to upload spam or adult content: over 90% of the channels and over 80% of the videos that we removed in September 2018 were removed for violating our policies on spam or adult content.”

Furthermore, in “the most egregious, but low-volume areas, like violent extremism and child safety,” YouTube says its “significant investment in fighting this type of content is having an impact,” noting that “over 90% of the videos uploaded in September 2018 and removed for Violent Extremism or Child Safety had fewer than 10 views.”

In addition to removing offensive content, YouTube has also worked to make comments on its platform safer. The company uses the same combination of “smart detection technology and human reviewers to flag, review, and remove spam, hate speech, and other abuse in comments.” It has also added tools that let creators moderate the comments on their videos more effectively, and more than one million creators now use these tools to moderate their channels’ comments.

YouTube has also increased its enforcement against “violative comments”:

- From July to September of 2018, YouTube’s teams removed over 224 million comments for violating its Community Guidelines.

- Most of those removals were for spam, and the “total number of removals” represents only a small fraction of the billions of comments posted on the platform.

One might expect this enforcement to shrink the comments ecosystem. According to YouTube, that has not happened: the platform’s daily users are 11% more likely to comment than they were last year.
