As online safety for minors remains a global pressure point, platforms are tightening control over how young users experience their products. The latest move comes from YouTube, which is rolling out new parental controls aimed squarely at one of its most addictive formats: Shorts.

Announced this week, the update gives parents far more control over how, and how much, their children and teens consume short-form video.

Parents can now limit (or block) Shorts entirely

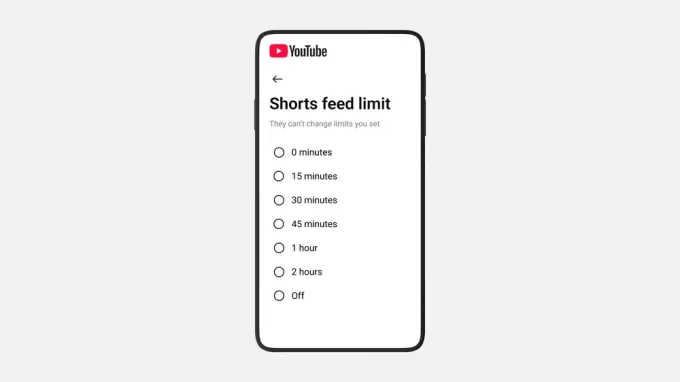

At the center of the update is a new Shorts-specific timer. Parents who manage a supervised account will be able to set a daily limit on how long their child can spend watching Shorts, YouTube’s answer to TikTok and Instagram Reels.

More notably, Shorts can now be blocked altogether. Parents can turn the format off permanently or only temporarily, for example when a child is meant to be using YouTube strictly for schoolwork or educational content.

It’s a clear acknowledgment of what everyone already knows: infinite scroll plus short-form video equals time evaporating at record speed.

Bedtime and “Take a Break” reminders get more flexible

YouTube is also expanding its existing wellbeing tools. Parents can now set custom Bedtime and Take a Break reminders for kids and teens, nudging them to step away from the screen.

These features aren’t just for families either. Adult users can also opt in and set their own limits, a quiet reminder that the Shorts rabbit hole doesn’t discriminate by age.

Anyone who’s ever handed a phone to a child knows the risk: one wrong tap, and your carefully trained algorithm is suddenly filled with cartoons and kids’ shows.

To address that, YouTube says it will soon make it easier to toggle between adult and child accounts inside the app, reducing friction when sharing devices. The fix still relies on users remembering to actually switch profiles.

These updates build on YouTube’s existing teen supervision tools, including the ability for parents to monitor channel activity if a teen is creating content. They also align YouTube with broader industry standards already adopted by platforms like TikTok and Instagram, which have introduced similar parental controls under increasing regulatory and cultural pressure.

Last year, YouTube also introduced age-estimation technology, designed to infer whether an account likely belongs to a teen and automatically adjust the experience accordingly, even if the user didn’t self-identify their age.