OpenAI is formalizing something millions of people are already doing: talking to ChatGPT about their health.

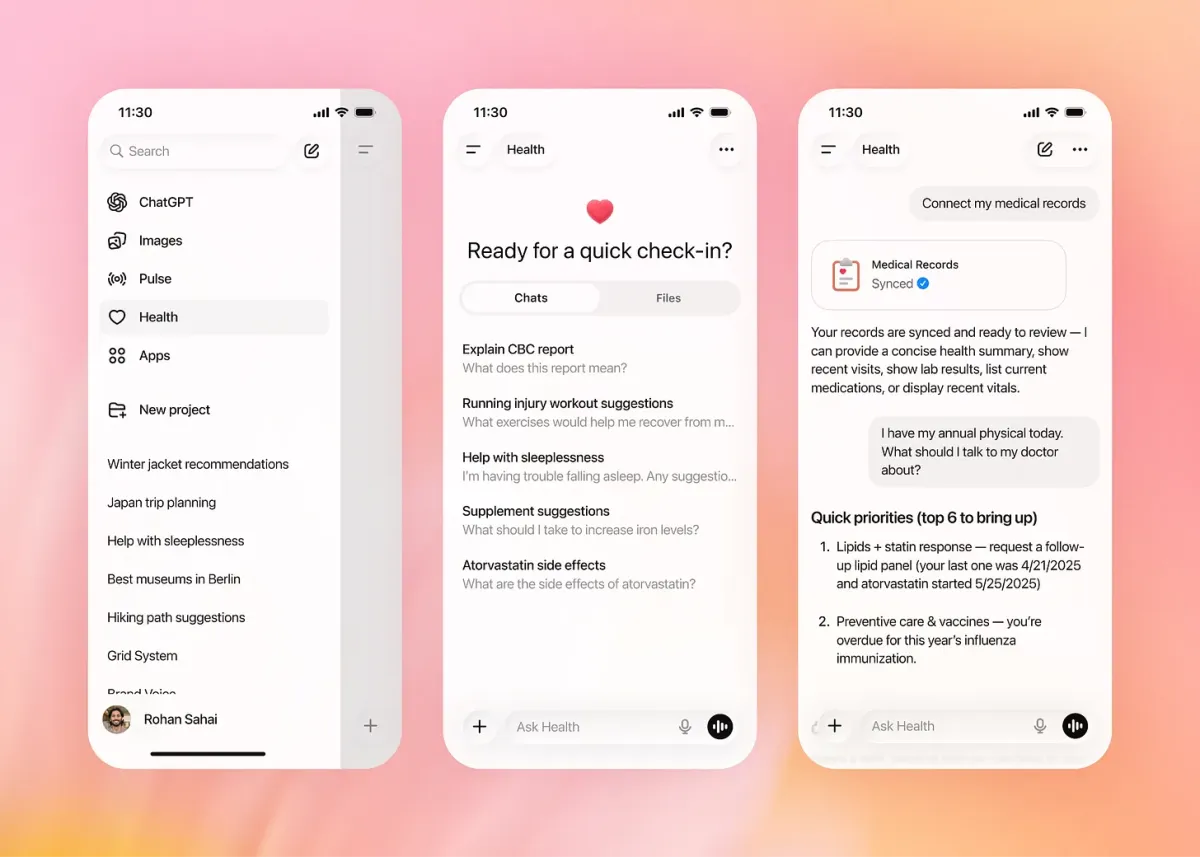

This week, the company unveiled ChatGPT Health, a new, dedicated space inside ChatGPT designed specifically for health and wellness conversations. According to OpenAI, more than 230 million users ask health-related questions every week, on topics ranging from fitness goals to symptoms and mental wellbeing. The new experience is meant to make those conversations more intentional, private, and better structured.

The key change isn’t what people can ask, but where those conversations live.

Health chats are siloed away from your standard ChatGPT threads, meaning personal medical context won’t accidentally surface in unrelated conversations. If you start discussing health topics outside of the Health section, ChatGPT will gently prompt you to move the conversation there instead.

That separation signals a growing awareness around context, privacy, and user comfort, especially when it comes to sensitive information.

Interestingly, ChatGPT Health won’t exist in total isolation. The assistant can still reference relevant context from your broader usage when it’s helpful. For example, if you’ve previously talked about training for a marathon, ChatGPT Health may already understand that you’re a runner when discussing fitness or recovery goals.

The product will also support integrations with wellness and health platforms like Apple Health, Function Health, and MyFitnessPal, allowing users to bring personal data into the conversation if they choose.

OpenAI emphasized that Health conversations will not be used to train its models, a notable reassurance given ongoing concerns around data usage and AI.

In a blog post, Fidji Simo framed ChatGPT Health as a response to real-world healthcare challenges: rising costs, limited access, overbooked doctors, and fragmented care experiences. The pitch is clear: AI isn’t meant to replace doctors, but it can help people think through questions, prepare for appointments, and better understand their own health journeys.

That said, OpenAI is careful to underline the boundaries. Large language models don’t “know” what’s true; they predict likely responses, which means hallucinations and inaccuracies are still a risk. In its own terms, OpenAI states that ChatGPT is not intended for diagnosing or treating medical conditions.

ChatGPT Health sits in a delicate middle ground: more structured and privacy-aware than casual chats, but still very much an informational and supportive tool, not a medical authority.

The launch of ChatGPT Health feels less like a radical new feature and more like an acknowledgment of reality: people are already turning to AI for health guidance. By creating a dedicated space, OpenAI is trying to make that behavior safer, clearer, and more responsibly framed.

The feature is expected to roll out in the coming weeks.