Facebook announced a new toolkit to help gaming creators build more positive, supportive, and inclusive communities on its platform.

Facebook’s Community Standards exist to protect users from some of the most serious problems they can face, such as hate speech, racist rhetoric, or terrorism, but sometimes a single rude comment is all it takes to derail a conversation. Gaming is a special case: some streaming communities take offense at certain behaviors that others find perfectly acceptable.

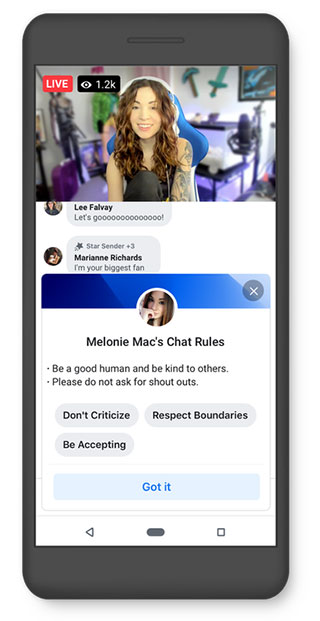

To help gaming creators set the tone in their own communities, Facebook is testing a new toolkit that offers preset chat rules and makes those rules more visible to viewers.

To build the toolkit and establish the preset rules and guidelines, Facebook worked with the Fair Play Alliance and partnered directly with its Executive Steering Committee.

“People form communities over a shared love of gaming, but we know some groups of people, like women, can be targets of negative, hurtful stereotypes — so, rules like ‘Be Accepting’ and ‘Respect Boundaries’ can help maintain a positive environment for everyone, regardless of race, ethnicity, sexual orientation, gender identity or ability,” explains Clara Siegel, Facebook Gaming Product Manager.

“Similarly, ‘Don’t Criticize’ can help newer players feel welcome. The rules will promote inclusion and respect to help people feel safe sharing their voice.”

Creators will be able to set these rules through a dedicated Chat Rules button in the streamer dashboard, and Facebook plans to add more preset rules later based on creator feedback. The company is currently testing the rules with a “small group of creators” and says they will roll out “globally in the coming months.”

The toolkit offers four new features:

Clear Standards: Creators choose their Chat Rules and add a custom description to set expectations before they go live. Fans who want to comment in the chat must first accept those rules.

Content Removals: Removed comments now disappear from the chat in real time, as soon as a moderator deletes them or bans the user who posted them.

Transparency in Moderation: Moderators can now select which rule a comment violated, so fans know why their content was removed. Facebook hopes that “this level of transparency will help creators educate their audience and inform well-intentioned fans who may have inadvertently broken a rule.”

Moderation Dashboard: Moderators also get access to a new moderation dashboard with resources to help prevent harassment, protect their privacy, and ensure creators feel safe.

Creators and moderators can still remove comments, mute viewers for a short period, or ban users outright. Banned users can continue to watch a stream but can no longer comment or react, and all of their previous comments are removed.
